We’re Fly.io, we’re a new public cloud that lets you put your compute where it matters: near your users. Today we’re announcing that you can do this with GPUs too, allowing you to do AI workloads on the edge. Want to find out more? Keep reading.

AI is pretty fly

AI is apparently a bit of a thing (maybe even an thing come to think about it). We’ve seen entire industries get transformed in the wake of ChatGPT existing (somehow it’s only been around for a year, I can’t believe it either). It’s likely to leave a huge impact on society as a whole in the same way that the Internet did once we got search engines. Like any good venture-capital funded infrastructure provider, we want to enable you to do hilarious things with AI using industrial-grade muscle.

Fly.io lets you run a full-stack app—or an entire dev platform based on the Fly Machines API—close to your users. Fly.io GPUs let you attach an Nvidia A100 to whatever you’re building, harnessing the full power of CUDA with more VRAM than your local 4090 can shake a ray-traced stick at. With these cards (or whatever you call a GPU attached to SXM fabric), AI/ML workloads are at your fingertips. You can recognize speech, segment text, summarize articles, synthesize images, and more at speeds that would make your homelab blush. You can even set one up as your programming companion with your model of choice in case you’ve just not been feeling it with the output of other models changing over time.

If you want to find out more about what these cards are and what using them is like, check out What are these “GPUs” really? It covers the history of GPUs and why it’s ironic that the cards we offer are called “Graphics Processing Units” in the first place.

Fly.io GPUs in Action

We want you to deploy your own code with your favorite models on top of Fly.io’s cloud backbone. Fly.io GPUs make this really easy.

You can get a GPU app running Ollama (our friends in text generation) in two steps:

Put this in your

fly.toml:app = "sandwich_ai" primary_region = "ord" vm.size = "a100-40gb" [build] image = "ollama/ollama" [mounts] source = "models" destination = "/root/.ollama" initial_size = "100gb"Run

fly apps create sandwich_ai && fly deploy.

If you want to read more about how to start your new sandwich empire, check out Scaling Large Language Models to zero with Ollama, it explains how to set up Ollama so that it automatically scales itself down when it’s not in use.

The speed of light is only so fast

Being able to spin up GPUs is great, but where Fly.io really shines is inference at the edge.

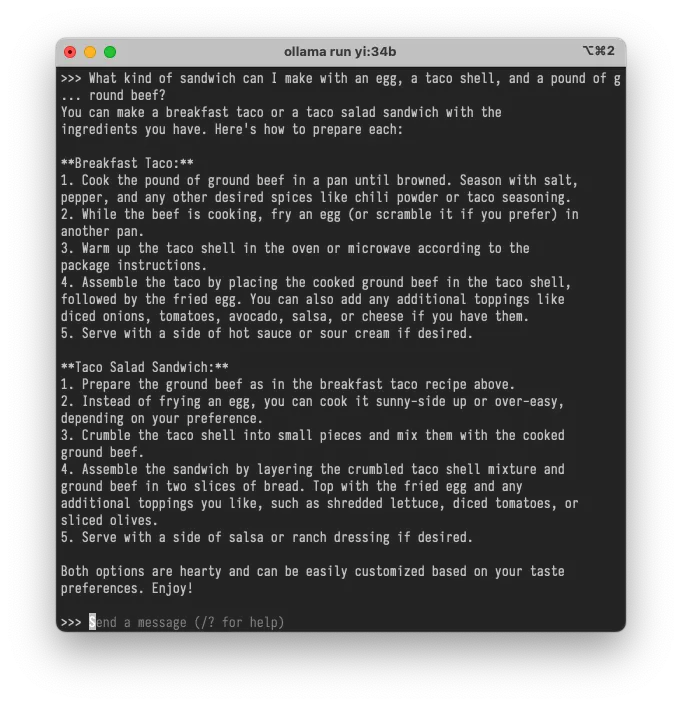

Let’s say you have an app that lets users enter ingredients they have in their kitchen and receive a sandwich recipe. Your users expect their recipes instantly (or at least as fast as the other leading apps). Seconds count when you need an emergency sandwich.

It’s depressingly customary in the AI industry to cherry-pick outputs. This was not cherry-picked. I used yi:34b to generate this recipe. I’m not sure what a taco salad sandwich is, but I might be willing to try it.

In the previous snippet, we deployed our app to ord (primary_region = "ord"). The good news is that our model returns a result really quickly and users in Chicago get instant sandwich recipes. It’s a good experience for users near your datacentre, and you can do this on any half decent cloud provider.

But surely people outside of Chicago need sandwiches too. Amsterdam has sandwich fiends as well. And sometimes it takes too long to have their requests leap across the pond. The speed of light is only so fast after all. Don’t worry, we’ve got your back. Fly.io has GPUs in datacentres all over the world. Even more, we’ll let you run the same program with the same public IP address and the same TLS certificates in any regions with GPU support.

Don’t believe us? See how you can scale your app up in Amsterdam with one command:

fly scale count 2 --region ams

It’s that easy.

Actually On-Demand

GPUs are powerful parallel processing packages, but they’re not cheap! Once we have enough people wanting to turn their fridge contents into tasty sandwiches, keeping a GPU or two running makes sense. But we’re just a small app still growing our user base while also funding the latest large sandwich model research. We want to only pay for GPUs when a user makes a request.

Let’s open up that fly.toml again, and add a section called services, and we’ll include instructions on how we want our app to scale up and down:

[[services]]

internal_port = 8080

protocol = "tcp"

auto_stop_machines = true

auto_start_machines = true

min_machines_running = 0

Now when no one needs sandwich recipes, you don’t pay for GPU time.

The Deets

We have GPUs ready to use in several US and EU regions and Sydney. You can deploy your sandwich, music generation, or AI illustration apps to:

- Ampere A100s with 40gb of RAM for $2.50/hr

- Ampere A100s with 80gb of RAM for $3.50/hr

- Lovelace L40s are coming soon (update: now here!) for $2.50/hr

By default, anything you deploy to GPUs will use eight heckin’ AMD EPYC CPU cores, and you can attach volumes up to 500 gigabytes. We’ll even give you discounts for reserved instances and dedicated hosts if you ask nicely.

We hope you have fun with these new cards and we’d love to see what you can do with them! Reach out to us on X (formerly Twitter) or the community forum and share what you’ve been up to. We’d love to see what we can make easier!