We’re Fly.io. React, Phoenix, Rails, whatever you use: we put your code into lightweight microVMs on our own hardware in 26 cities and counting. Check us out—your app can be running close to your users within minutes.

Imagine this: you have invented the best design tool since Figma. But before you can compete with the design-industry heavyweight, you need to be able to compete on one of Figma’s main propositions: real-time collaboration. You do some research and find that this is an upsettingly hard problem.

You start with a single server that coordinates users’ changes for each document, using something like Replicache (which we’ll come back to in a sec). Clients connect over WebSockets. But your business grows, and that one server gets overloaded with connections.

You’ll have to split your documents among servers, but that means routing connections for each document to the server that has it. What now? Write complex networking logic to correctly route connections? “There has to be a better way,” you say to yourself.

“There is!” I say, popping out of your computer!

OK, I may have passed through “conversational writing style” right into “witchcraft.” Let’s reel it back in.

I’m going to demonstrate what I think is a good solution for the problem of a distributed real-time backend, using Replicache, Fly Machines and a Fly.io feature we’ve talked about before: the Fly-Replay header.

Our demo app for today is—drum roll—a real-time collaborative todo app!

Replicache: an aside

Replicache is a “JavaScript framework for building high-performance, offline-capable, collaborative web apps.” It handles the hard parts of collaboration tech for us: conflicts, schema migrations, tabs, all the good (and bad) stuff.

Unfortunately for us, for all that Replicache is super good at, it does not use WebSockets. But WebSockets are perfect for frequent bidirectional updates to a document! Thankfully, using the Power of Software™ we can just make Replicache bend to our WebSockety will.

The protocol that Replicache uses can be described in three parts: a push endpoint that clients can use to push changes to the server, a pull endpoint the client can use to pull changes made since the last time it pulled, and a persistent connection (usually either WebSockets or Server Sent Events) to poke clients and let them know something has changed so they should pull. If the protocol requires a persistent connection anyway, we should make everything work over that connection.
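To make those three parts a bit more concrete, here is a toy sketch of push, pull, and poke as messages on one connection. Everything in it (`ClientMessage`, `ServerMessage`, `ToyServer`, the field names) is made up for illustration; it is not Replicache's actual wire format.

```typescript
// Hypothetical message shapes; these are NOT Replicache's real wire format.
type Mutation = { id: number; name: string; args: unknown };

type ClientMessage =
  | { kind: "push"; mutations: Mutation[] } // client -> server: "here are my changes"
  | { kind: "pull"; sinceVersion: number }; // client -> server: "what did I miss?"

type ServerMessage =
  | { kind: "poke" } // server -> client: "something changed, you should pull"
  | { kind: "pullResponse"; version: number; changes: Mutation[] };

// A toy in-memory server: the "version" is just the number of mutations stored.
class ToyServer {
  private log: Mutation[] = [];

  handle(msg: ClientMessage): ServerMessage {
    if (msg.kind === "push") {
      this.log.push(...msg.mutations);
      // A real server would poke every connected client, not only the sender.
      return { kind: "poke" };
    }
    // Pull: return everything recorded after the version the client already has.
    return {
      kind: "pullResponse",
      version: this.log.length,
      changes: this.log.slice(msg.sinceVersion),
    };
  }
}
```

Once all three message kinds travel over the same socket, the separate HTTP endpoints stop being necessary, which is the idea the rest of this section builds on.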

Don’t do what I am about to describe in production without some serious thought/tuning. While the way I do things totally works, I also have not tested it beyond the confines of this basic demo, so like, proceed with caution.

Building on replicache-express, which implements the push, pull, and poke endpoints, I added a new endpoint for opening a WebSocket connection to a given “room”; in this case a room is just a given document.

Here is the (slightly simplified) flow that happens when a WebSocket connection is opened via /api/replicache/websocket/:spaceID:

- The connection is opened.

- The server responds with {"data":"hello"}.

- The WebSocket connection is stored in a map on the server with other WebSocket connections for that given spaceID.

- The client sends a message like {messageType: "hello", clientID: "4b9e0797-8321-4c03-ba82-01edafdeca0d"}.

- The server responds with the complete state it has stored to sync the local Replicache state on the client.

- When the client sends a change the server processes it, stores it, and then sends the updates to all clients subscribed to the spaceID where things were changed.
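The bookkeeping part of that flow can be sketched roughly like this. `SocketLike` and `Rooms` are hypothetical names, and `SocketLike` stands in for a real WebSocket so the sketch runs without a server; the demo's actual code may be organized differently.

```typescript
// Minimal stand-in for a WebSocket: only the one method this sketch needs.
interface SocketLike {
  send(data: string): void;
}

// Hypothetical registry of open connections, keyed by spaceID.
class Rooms {
  private rooms = new Map<string, Set<SocketLike>>();

  // Called when a connection is opened for a given spaceID.
  join(spaceID: string, socket: SocketLike): void {
    // Greet the client first, as in the flow above.
    socket.send(JSON.stringify({ data: "hello" }));
    let members = this.rooms.get(spaceID);
    if (!members) {
      members = new Set<SocketLike>();
      this.rooms.set(spaceID, members);
    }
    members.add(socket);
  }

  // Called when a connection closes, so we stop sending to it.
  leave(spaceID: string, socket: SocketLike): void {
    const members = this.rooms.get(spaceID);
    if (!members) return;
    members.delete(socket);
    if (members.size === 0) this.rooms.delete(spaceID);
  }

  // Send an update to every client subscribed to spaceID.
  // Returns how many clients were notified.
  broadcast(spaceID: string, update: unknown): number {
    const members = this.rooms.get(spaceID);
    if (!members) return 0;
    const payload = JSON.stringify(update);
    members.forEach((socket) => socket.send(payload));
    return members.size;
  }
}
```

With a real WebSocket library you would call `join` from the connection handler and `leave` from the close handler; the map itself stays the same.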

The actual process is slightly more nuanced to prevent sending more over the wire than we really have to (like updates we know clients have already seen). Every time we send a message to a given client we remember that we sent it. This way, the next time we need to update the client we can just send it what has changed and not the whole document. If you are curious how the server sync logic works, the code is available here.
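One simple way to do that "remember what we sent" bookkeeping is to keep a version counter per client. This is a sketch under my own assumptions (sequential version numbers, a hypothetical `ChangeTracker` class), not a copy of the demo's sync logic.

```typescript
type Change = { version: number; key: string; value: unknown };

// Hypothetical tracker: remembers the highest version each client has been sent.
class ChangeTracker {
  private changes: Change[] = [];
  private lastSent = new Map<string, number>(); // clientID -> last version sent

  // Record a change to the document; versions are 1, 2, 3, ...
  record(key: string, value: unknown): Change {
    const change: Change = { version: this.changes.length + 1, key, value };
    this.changes.push(change);
    return change;
  }

  // Return only the changes this client has not been sent yet,
  // and remember that they have now been sent.
  unsentFor(clientID: string): Change[] {
    const seen = this.lastSent.get(clientID) ?? 0;
    const pending = this.changes.filter((c) => c.version > seen);
    this.lastSent.set(clientID, this.changes.length);
    return pending;
  }
}
```

A client the server has never seen gets the whole document; a client that is already up to date gets an empty list, so nothing needs to go over the wire.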

The client implements the inverse of this process by overriding the Replicache client’s push and pull logic to use our persistent WebSocket connection. When the frontend receives a message it stores the changes from the message in a temporary array, then instructs the Replicache client to “pull”. The trick is that we override the pull logic from the Replicache client to just read straight from the array we’ve been storing WebSocket messages in.

    puller: async () => {
      const res: PullerResult = {
        httpRequestInfo: {
          httpStatusCode: 200,
          errorMessage: ""
        },
        response: {
          cookie,
          patch,
          lastMutationID
        }
      }
      patch = []
      return res
    }

We similarly override the push functionality to write the message over our WebSocket instead of HTTP.

    pusher: async req => {
      const res: HTTPRequestInfo = {
        httpStatusCode: 200,
        errorMessage: ""
      }
      socket.send(JSON.stringify(await req.json()))
      return res
    },
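The piece those two overrides leave out is the glue that fills the temporary array when a message arrives. Here is a sketch of what that could look like. `PatchBuffer` and `Pullable` are hypothetical names, and the assumption that incoming messages carry a `patch` field is mine; the `put` and `del` operations mirror Replicache's patch format.

```typescript
// Patch operations in the style of Replicache's pull response.
type PatchOp =
  | { op: "put"; key: string; value: unknown }
  | { op: "del"; key: string };

// Stand-in for the Replicache client; only the method this sketch needs.
interface Pullable {
  pull(): void;
}

// Hypothetical buffer sitting between the socket and the overridden puller.
class PatchBuffer {
  private patch: PatchOp[] = [];
  private client: Pullable;

  constructor(client: Pullable) {
    this.client = client;
  }

  // Wire this up to the socket's message event.
  onMessage(raw: string): void {
    // Assumption: server messages look like { patch: [...] }.
    const msg = JSON.parse(raw) as { patch?: PatchOp[] };
    if (!msg.patch || msg.patch.length === 0) return; // e.g. the initial hello
    this.patch.push(...msg.patch);
    this.client.pull(); // ask the client to run the puller
  }

  // Called from the overridden puller: hand over what we have and start fresh.
  drain(): PatchOp[] {
    const out = this.patch;
    this.patch = [];
    return out;
  }
}
```

The puller would then return `drain()` as its `patch`, which is the same "read the array, then empty it" move as in the snippet above.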

Our architecture

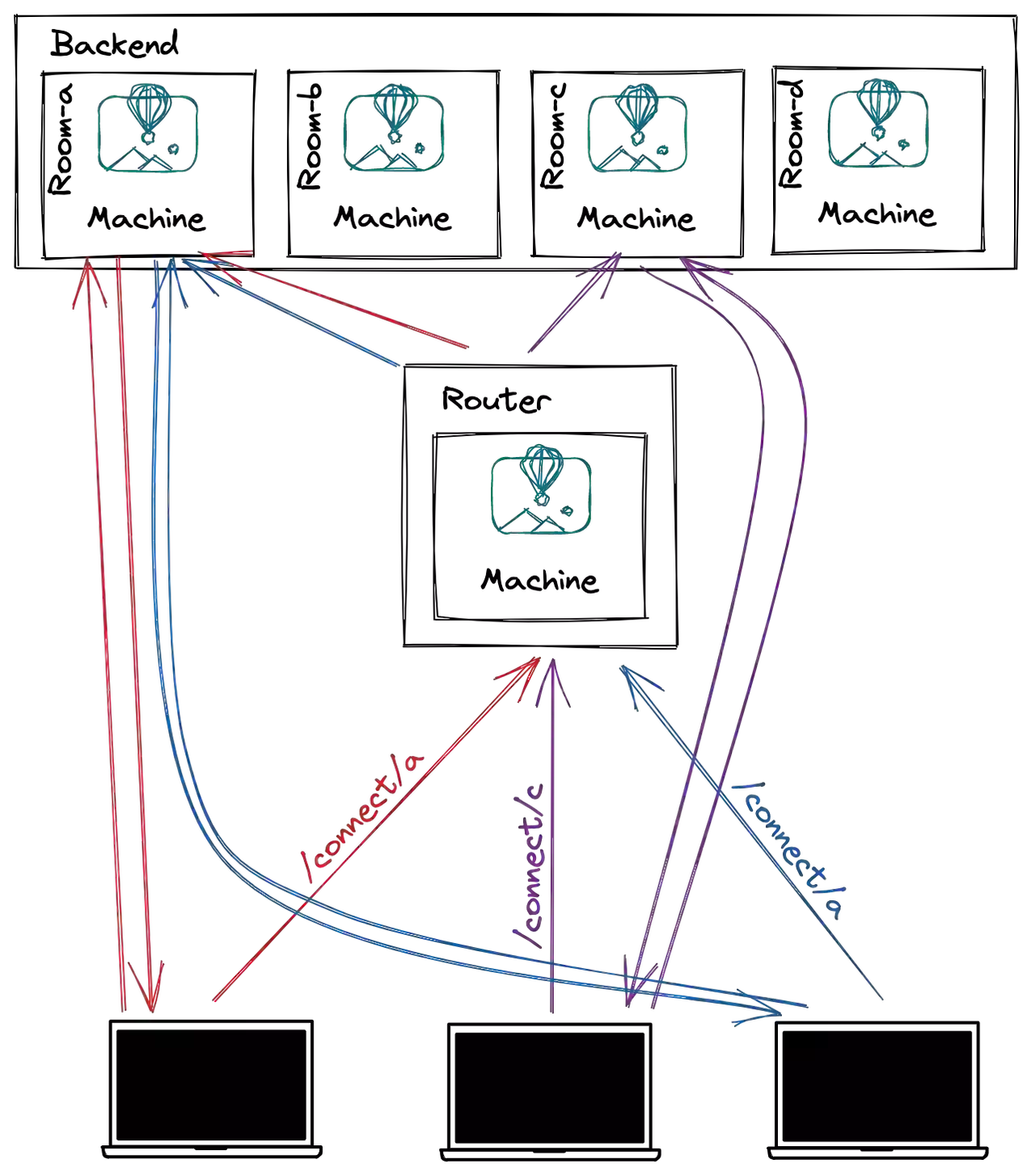

Let’s take a look at the architecture diagram for this demo.

The Replicache WebSocket server we just talked about is what we can see running on each Fly Machine in the backend section. For the sake of the Figma-like app example, we can think of each Machine as running the backend for a given design document. You can see them named room(a-d).

We can see something cool happening, though: instead of our clients having to know where to find the specific backend server where the document they want lives, all the clients connect via the same router. The router is the super cool part of this app.

The router

Let’s think for a second about what our router has to do:

1. Know the address of the server the user’s stuff is on

2. Somehow connect the user to that server

For the sake of this demo, we will consider requirement 1 out of scope and have our router use hardcoded values.

For requirement 2, we just need to help the client find the right backend machine for its document when it makes a request for a WebSocket connection upgrade; the client and the backend can establish their connection and communicate directly from then on.

We’ll be running the backend servers as Fly Machines on the same Fly.io private network as the router app, which means we can use a super-cool feature called fly-replay to send a connection request on to a given VM.

fly-replay is a special header understood by fly-proxy, the aptly named Fly.io proxy, that can be used to replay a request somewhere else inside your private network. For instance, to replay a request in region sjc, your app’s response could include the header fly-replay: region=sjc. You can also use fly-replay: app=otherapp;instance=instanceid to target a specific VM. This is what we’ll use in our router.
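If you want to build those header values in code rather than by hand, a tiny helper does the job. `ReplayTarget` and `flyReplayValue` are hypothetical names, and the sketch only covers the two forms mentioned above.

```typescript
// The two fly-replay forms described above: a region, or a specific VM in an app.
type ReplayTarget =
  | { region: string }
  | { app: string; instance: string };

// Build the value for the fly-replay response header.
function flyReplayValue(target: ReplayTarget): string {
  if ("region" in target) {
    return `region=${target.region}`;
  }
  return `app=${target.app};instance=${target.instance}`;
}

// Usage: set the result as the "fly-replay" header on your response.
const toRegion = flyReplayValue({ region: "sjc" });
const toMachine = flyReplayValue({ app: "otherapp", instance: "instanceid" });
```

Keeping this in one function means the rest of the router never has to care about the header syntax.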

Putting it all together

I unfortunately am not going to make the Next Best Thing Since Figma in this post for the sake of example, but I built something almost as good: a collaborative real-time todo list based on this demo from Replicache.

Our todo list has three layers (directions to actually run these yourself can be found here):

- The frontend: a simple React app that connects to…

- The router: a simple Express server that uses fly-replay to replay the WebSocket request against the right instance of…

- The backend: the Replicache-meets-WebSockets thing we talked about before.

For the backend we just have a pool of Fly Machines, each one responsible for a single todo list. Each todo list will be identified by the ID of the machine it runs on.

So when a client connects to /api/replicache/websocket/machineID on the router, the router responds with fly-replay: app=replicache-backend;instance=machineID. This response is handled by fly-proxy, which replays the request against the appropriate backend machine, and voilà: the client is now directly talking to the right backend machine.
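Stripped of the Express plumbing, the router's decision boils down to something like the sketch below. The machine IDs are placeholders, `route` and `RouterResponse` are hypothetical names, and the status codes are my own choice; the real router in the demo may look different.

```typescript
const BACKEND_APP = "replicache-backend";

// Hardcoded list of backend machines (placeholder IDs, not real ones).
const KNOWN_MACHINES = new Set<string>(["machine-a", "machine-b"]);

type RouterResponse = { status: number; headers: Record<string, string> };

// Decide how to answer a request, given only its path.
function route(path: string): RouterResponse {
  const match = /^\/api\/replicache\/websocket\/([^/]+)$/.exec(path);
  const machineID = match ? match[1] : undefined;
  if (!machineID || !KNOWN_MACHINES.has(machineID)) {
    return { status: 404, headers: {} };
  }
  // fly-proxy sees this header and replays the request on the target machine.
  return {
    status: 200, // placeholder; the header is what matters in this sketch
    headers: { "fly-replay": `app=${BACKEND_APP};instance=${machineID}` },
  };
}
```

In an Express handler you would copy `status` and `headers` onto the response and end it without a body; the router never has to touch the WebSocket traffic itself.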

We now have a set of way over-engineered todo lists: each list runs on its own VM, and a simple router leveraging fly-replay sends WebSocket connections to the correct backend.

You can check out the demo for yourself at https://replicache-frontend.fly.dev. You’ll see a list of clickable IDs. Each ID is a machine running an instance of the backend which corresponds to an individual todo list. If you are inclined to set this demo up for yourself, the code and instructions are here.