Fly.io empowers you to run apps globally, close to your users. If you want to ship a Laravel app, try it out on Fly.io. It takes just a couple of minutes.

I’ve been searching for a remote development setup for the better part of 11 years.

Originally this was due to falling in love with an underpowered MacBook Air. Now I’m older and impatient - I’m opting out of dependency hell.

Recently, I landed on something that I really enjoy. It’s fast, and the trade-offs (there are always trade-offs!) are ones I don’t mind.

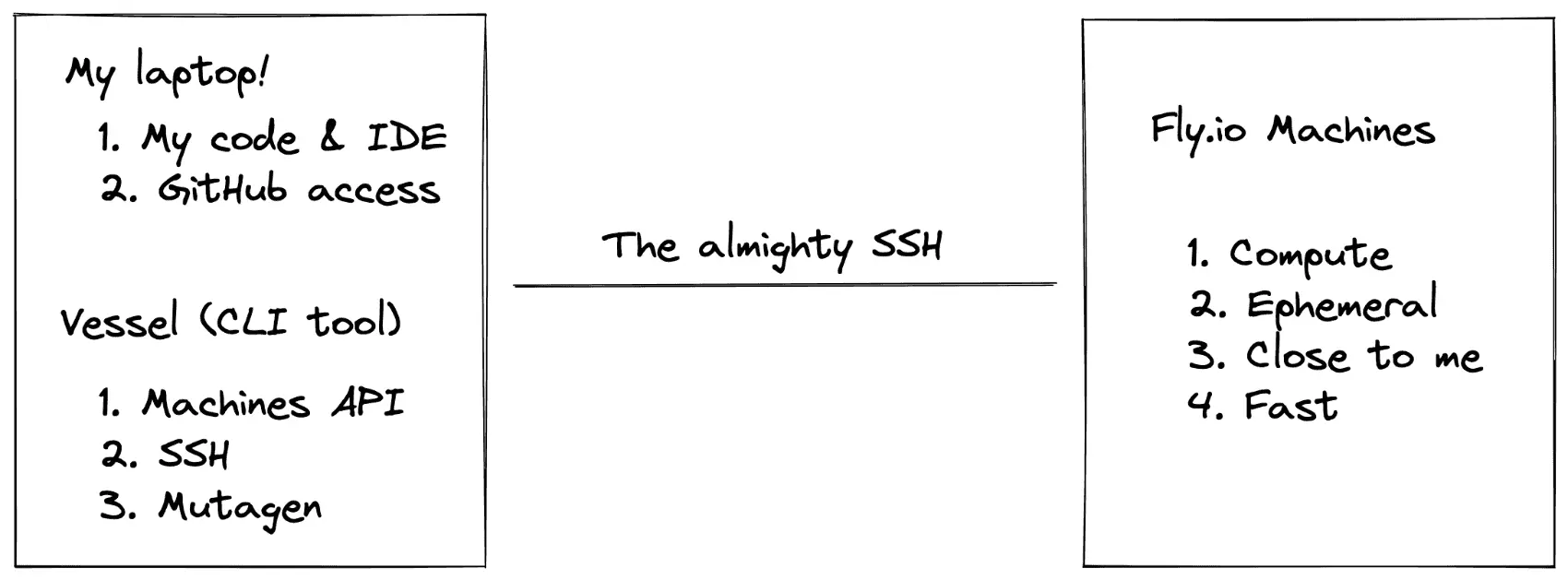

The main “thing” I like about this setup is that I sync local files into the remote machine. This way my IDE, git config, code, etc. live on my machine, but I can use a fast, ephemeral VM for compute.

The reason this all works is because Fly Machines (and a guy named Amos) are rad as heck. I wrapped up the Machines API and a few other technical details into Vessel, a CLI tool to make dev environments for me.

To see how this is all put together, read on here! You can also check out my Laracon lightning talk on Vessel.

The State of Things

The remote dev dream is often paired with the word “just”. Just spin up a VM somewhere! Just automate it! What could a banana cost? $10?

Remote dev via some VM in the cloud is death by a million paper cuts. The VM is often the source of truth, containing your codebase, git repository, SSH access to git repos, etc. These VMs easily grow into a trash fire of sloppily installed and configured software.

There’s just…friction when you’re not developing locally.

There’s hope, though

I actually think we’re early in a growing remote development “movement”.

Within the last few years, more modern solutions have come about!

A well-known solution right now is Gitpod. In their model, your code is on their machines alongside their compute “stuff” (the programming language and version you need, etc).

Gitpod, however, doesn’t gel with me. I have to use VS Code in the browser or set up its SSH agent. If you prefer JetBrains IDEs, you need to install and configure their Gateway.

Additionally, your code (the source of truth™) lives on their servers. You “git push…” from within their servers. This isn’t necessarily an issue (presumably they have permission to push to your repository via their OAuth applications, alleviating worry about SSH access management), but 3rd-party push access to my code is not my favorite.

What I do like about Gitpod is that the environments are ephemeral. This is painful at first, but a forcing function that makes you automate the creation of an environment (so you can be productive quickly) ends up being a huge win.

It’s harder for you to muck up the place with ill-conceived, Stack Overflow-sourced apt-get commands!

What I Want

Ideally my development environment feels local, but the compute is remote.

I already have my stuff set up and configured locally, just as I like it. Git (and git aliases), SSH keys, IDEs, code - it’s all local and configured just-so for me. I just don’t want to handle my projects’ dependencies - flavors and versions of various programming languages, required server dependencies, and so on.

In 2022, there should be technical solutions to make this happen. The closest I’ve come so far is with Fly.io’s Machines API.

What We Need

After a bit of thought (and nixing some totally dumb ideas), a basic setup that still feels satisfying only has 3 requirements:

- Fast(ish) internet and geographically close compute - to keep latency low

- Fast file syncing - since I want my source of truth to be local

- Security - this shouldn’t be publicly accessible

Seems simple enough! I decided to see if I could find solutions to all of these without too much headache.

Close to Me (and You)

Here’s the simplest requirement met: Fly.io’s tagline is “serve apps close to your users”. I can throw some compute into a LOT of regions!

This was the first thing that made me want to try a remote-dev experiment. Lots of cloud providers have plenty of regions, and Fly.io has a lot of them too, but what stands out is that launching a VM into any given region is, intentionally, very low friction.

Geographically close compute means reduced latency for everything else we need to do.

Fast File Syncing

We have the compute, but we still need file syncing and some network forwarding. Mutagen handles both of these nicely. It uses SSH to do the exact 2 things we need:

- File synchronization

- Network forwarding

These are Mutagen’s 2 main features.

File syncing is pretty obvious - it’ll sync files over SSH. I’ll get into what that looks like in a bit.

Security via Network Forwarding

I’m using “security” with heavy quotes around it. Basically I just don’t want the dev environment to be publicly reachable.

Mutagen handles network forwarding over SSH. This provides the “security”!

Network forwarding lets me load localhost:8000 in my browser to reach a web app I’m hacking on. No one else can use their browser to reach the dev environment this way.

I’m using a web application as an example, but you’re not limited to HTTP. You can forward any ports you want! I think the only limitation is that it needs to be a TCP connection instead of UDP.

Machines, and all apps in Fly.io, can be accessed over their private network via a WireGuard VPN. If you need that level of security, you could have absolutely nothing listening for public connections, and connect yourself via VPN.

The “Machina”

So what’s the deal with Machines?

Machines are basically version 2 of Fly.io’s app platform. Eventually everything will be Machines and we can maybe stop calling them Machines and “v2”.

Machines have some interesting behaviors.

First, the mundane (but important): We can manage Machines via an API (there’s even a Terraform provider). This means we can automate the lifecycle of a development environment, which is great, although still a bit more work than I want to do.

Here’s something more interesting: Machines can be stopped. Not only that, but they’ll stop automatically if their main process exits with a status code of zero. I don’t need to send any API calls to stop a Machine.

Since you’re not billed for compute time you don’t use, this is great.

Another interesting thing: Machines can start back up in just a few milliseconds. They run on Firecracker, just like AWS’s Lambda. Stopped machines are essentially “paused” and startup time is quick.

On top of that, Fly’s proxy layer will start a machine on network access. Just make a request to the machine! Yet another API call I don’t need to make.

So we have some really useful building blocks on which to make a development environment, with minimal effort.

Automatically Stopping Machines

Amos, who works on the Fly Proxy (the routing layer of Fly.io), wrote an article on remote development on Machines. He’s a vim user, which is a bit grim to me for development, but the article goes into detail on some really cool stuff.

Most notably, he figured out how to determine when a server isn’t in use and shut it down. The TL;DR is that if there are no SSH connections, the machine is considered idle and will shut down (exit 0) after a timeout.

We can totally steal that idea and do the same.
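Amos’ program is written in Rust. As a rough illustration of the same idea, here is a shell sketch. It assumes the image ships the ss tool from iproute2 and that sshd listens on port 2222. The function names and the five-minute limit are placeholders, not what Vessel uses.

```shell
#!/bin/sh
# Shell sketch of the "exit 0 when idle" idea. This is NOT Amos' Rust program;
# the function names, the port and the timings are all assumptions.

SSH_PORT="${SSH_PORT:-2222}"     # port the SSH daemon listens on
IDLE_LIMIT="${IDLE_LIMIT:-300}"  # seconds with no SSH connections before giving up
CHECK_EVERY="${CHECK_EVERY:-10}" # seconds between checks

# Count established TCP connections whose *local* port is $1.
# Reads `ss -Htn state established` output on stdin. With a single state
# filter, ss omits the State column, so field 3 is the local address:port
# (IPv6 addresses look like [fdaa::3]:2222).
count_conns_on_port() {
    awk -v port="$1" '
        { n = split($3, parts, ":"); if (parts[n] == port) c++ }
        END { print c + 0 }
    '
}

# Block until nobody has been connected over SSH for IDLE_LIMIT seconds.
wait_until_idle() {
    idle=0
    while [ "$idle" -lt "$IDLE_LIMIT" ]; do
        sleep "$CHECK_EVERY"
        conns="$(ss -Htn state established | count_conns_on_port "$SSH_PORT")"
        if [ "$conns" -gt 0 ]; then
            idle=0                          # someone is connected: reset the timer
        else
            idle=$((idle + CHECK_EVERY))
        fi
    done
}
```

An entrypoint could start its services, call wait_until_idle, and then exit 0. That exit code tells Fly to stop the Machine rather than restart it. Parsing the ss output in a separate function keeps the counting logic testable without a live network.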

What’s Running

We need our Machine to run something that tracks whether it’s idle (by watching for SSH connections), and the Machine also needs to contain our development dependencies.

If you’re not familiar with Fly.io, all you need to know is that it takes a Docker image, and converts it to a for-real virtual machine.

To get set up with a functional Docker image, there are a few things to consider for this use case.

The first thing is that Fly.io uses port 22 (on the private network interface) for the machinery that makes fly ssh console work. To avoid conflicts, I had the SSH daemon listen on port 2222.

You can see the base Docker image I used, and its SSH configuration, here. The ENTRYPOINT script takes care of finding a public key and adding it to authorized_keys (here).
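The ENTRYPOINT step can be approximated as follows. This is a sketch, not the linked script. It assumes the key arrives in the VESSEL_PUBLIC_KEY environment variable (as in the Machine config later on), and the helper name install_public_key is hypothetical. The function appends the key only once, because Machines restart often, and it sets the permissions sshd insists on.

```shell
#!/bin/sh
# Sketch of an ENTRYPOINT step that authorizes a public key for SSH.
# Assumes the key is passed via VESSEL_PUBLIC_KEY; the helper is hypothetical.

# install_public_key <home-dir> <public-key>
install_public_key() {
    if [ -z "$2" ]; then
        echo "install_public_key: no public key given" >&2
        return 1
    fi
    # sshd ignores keys if ~/.ssh or authorized_keys is too permissive.
    mkdir -p "$1/.ssh" && chmod 700 "$1/.ssh" || return 1
    touch "$1/.ssh/authorized_keys" || return 1
    # Append only if this exact key isn't already present (restarts re-run this).
    if ! grep -qxF "$2" "$1/.ssh/authorized_keys"; then
        printf '%s\n' "$2" >> "$1/.ssh/authorized_keys"
    fi
    chmod 600 "$1/.ssh/authorized_keys"
}
```

You would call it as install_public_key /root "$VESSEL_PUBLIC_KEY" (or with whichever user’s home you SSH in as) before sshd starts.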

The Docker configuration linked above is used as a base image. For my own PHP projects, I made a PHP-specific Docker image on top of that base image.

Counting Idle Time

As mentioned, Machines will stop when your application exits with status code 0 (success). An error code will result in a restart.

Additionally, Machines work just like Fly.io apps - you feed them a Docker image, and Fly.io converts it to a real VM.

We (ab)use this by having our Docker image run the (“borrowed”) Rust program that exits when it detects the machine is idle.

Amos’ article covers how to do this ad nauseam, and I 100% stole his idea like it was a hot Stack Overflow answer. Here is my almost-exact-copy of it in the base Docker image.

The main trick here is that the Docker image is configured to run that Rust program as its “main” process (the ENTRYPOINT or CMD).

Since that program is the main process running, it’s a convenient place to take care of a few other things. One task is starting the SSH daemon. The other is running…whatever else we need.

I chose to use Supervisord to help run anything else. The Rust program starts SSH, and then Supervisord. Supervisord will start whatever it’s configured to monitor.
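To make the division of labor concrete, here is a shell sketch of what that main process is responsible for. Vessel does this in Rust. The command lines below (sshd on port 2222, the supervisord config path) are assumptions about the image, and main_process is a hypothetical name.

```shell
#!/bin/sh
# Shell sketch of the main process's duties (Vessel does this in Rust).
# The commands are assumptions about the image: OpenSSH's sshd must be started
# by absolute path, and host keys must already exist (e.g. via ssh-keygen -A).
SSHD_CMD="${SSHD_CMD:-/usr/sbin/sshd -p 2222}"
SUPERVISORD_CMD="${SUPERVISORD_CMD:-supervisord -c /etc/supervisor/supervisord.conf}"

# main_process <idle-check command...>
# Starts sshd and supervisord (both daemonize by default), then runs the idle
# check, which should return once the machine has been idle long enough.
main_process() {
    $SSHD_CMD || return 1          # unquoted on purpose: split into arguments
    $SUPERVISORD_CMD || return 1   # runs whatever its config lists (maybe nothing)
    "$@" || return 1               # block until idle
    return 0                       # caller exits 0, so Fly stops the Machine
}
```

In an ENTRYPOINT script this might be main_process some-idle-check; exit $? (where some-idle-check is a placeholder for any command that returns once there have been no SSH connections for a while). If sshd or supervisord fails to start, the function returns non-zero, so Fly restarts the Machine instead of quietly stopping it.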

Now, while Supervisord is installed in the base image, it actually has no configuration. However, for my PHP image, the Dockerfile uses the base image and adds Supervisord configuration to run Nginx and PHP-FPM!
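As an illustration of what the PHP image might add, this sketch writes a supervisord program file for Nginx and PHP-FPM. The file name, the conf.d location and the default php-fpm8.1 binary name are all assumptions, and the binary name in particular varies by distro and PHP version. Both programs are kept in the foreground (daemon off; and -F) because supervisord can only monitor processes that don’t fork into the background.

```shell
#!/bin/sh
# Writes a supervisord program file for Nginx + PHP-FPM. Paths and the
# php-fpm binary name are assumptions; adjust them for your image.

# write_php_programs <conf-dir> [php-fpm-binary]
write_php_programs() {
    mkdir -p "$1" || return 1
    cat > "$1/php.conf" <<EOF
[program:nginx]
; keep nginx in the foreground so supervisord can monitor it
command=nginx -g 'daemon off;'
autorestart=true

[program:php-fpm]
; -F (--nodaemonize) keeps php-fpm in the foreground
command=${2:-php-fpm8.1} -F
autorestart=true
EOF
}
```

With Debian’s supervisor package, files in /etc/supervisor/conf.d/ are included by the main config. A Dockerfile RUN step could therefore write the file with write_php_programs /etc/supervisor/conf.d.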


Managing Machines

Machines conveniently let us skip some lifecycle management, but we can’t avoid writing at least some code against Fly.io’s APIs.

Let’s see what it looks like to create a Machine. The basic steps are:

- Register an App

- Run a Machine within the app

Of course, there are details!

```shell
# For the time being, we need to proxy into our
# Fly.io org private network to make API calls.
# (This runs in the foreground, so leave it going in another terminal.)
fly machine api-proxy

# Create an app named "some-app"
curl -i -X POST \
  -H "Authorization: Bearer ${FLY_API_TOKEN}" \
  -H "Content-Type: application/json" \
  "http://${FLY_API_HOSTNAME}/v1/apps" \
  -d '{"app_name": "some-app", "org_slug": "personal"}'

# Run a machine in that app
curl -i -X POST \
  -H "Authorization: Bearer ${FLY_API_TOKEN}" \
  -H "Content-Type: application/json" \
  "http://${FLY_API_HOSTNAME}/v1/apps/some-app/machines" \
  -d '{bunch of json}'
```

Your FLY_API_HOSTNAME value will depend on whether you’re using the api-proxy, are VPNed into your private network, or are reading this article way later and the API is public.

There are no surprises so far! I did cheat a bit with the {bunch of json} thing, though. Here’s what that JSON looks like:
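Every Machines API call repeats the same headers, so a small wrapper helps. This is a sketch: machines_api and fly_api_hostname are hypothetical helpers. The two addresses are the ones Fly’s Machines API docs gave at the time of writing (the local api-proxy, or the internal API host over WireGuard), so check the current docs before relying on them.

```shell
#!/bin/sh
# Small wrapper around Machines API calls. The hostnames are assumptions taken
# from Fly's docs at the time of writing; the helper names are hypothetical.

# fly_api_hostname <proxy|vpn>
fly_api_hostname() {
    case "$1" in
        proxy) echo "127.0.0.1:4280" ;;       # `fly machine api-proxy` listener
        vpn)   echo "_api.internal:4280" ;;   # reachable over WireGuard
        *)     echo "usage: fly_api_hostname proxy|vpn" >&2; return 1 ;;
    esac
}

# machines_api <METHOD> <path> [json-body]
# Needs FLY_API_TOKEN and FLY_API_HOSTNAME to be set.
machines_api() {
    if [ -z "$FLY_API_TOKEN" ] || [ -z "$FLY_API_HOSTNAME" ]; then
        echo "machines_api: set FLY_API_TOKEN and FLY_API_HOSTNAME" >&2
        return 1
    fi
    if [ -n "$3" ]; then
        curl -sS -X "$1" \
            -H "Authorization: Bearer ${FLY_API_TOKEN}" \
            -H "Content-Type: application/json" \
            "http://${FLY_API_HOSTNAME}$2" -d "$3"
    else
        curl -sS -X "$1" \
            -H "Authorization: Bearer ${FLY_API_TOKEN}" \
            "http://${FLY_API_HOSTNAME}$2"
    fi
}
```

For example, machines_api POST /v1/apps '{"app_name": "some-app", "org_slug": "personal"}' replays the create-app curl call above.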

```json
{
  "name": "vessel-php",
  "region": "dfw",
  "config": {
    "image": "vesselphp/php:8.1",
    "env": {
      "VESSEL_PUBLIC_KEY": "public-key-string"
    },
    "services": [
      {
        "internal_port": 2222,
        "protocol": "tcp",
        "ports": [
          {
            "port": 22
          }
        ]
      }
    ]
  }
}
```

There are a few things worth pointing out here.

First, the image used is a public Docker image, available on Docker Hub. Fly.io doesn’t currently support privately hosted Docker images, so the 2 places they can live are:

- Somewhere public (usually, but not exclusively, Docker Hub)

- Fly.io’s own registry

The Fly.io registry is used behind the scenes when deploying via fly deploy. But you can actually push to that registry yourself after creating an app. This article has a great write-up of what that looks like.
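Pushing your own image there can look roughly like the sketch below. It assumes flyctl and Docker are installed and that the app already exists. The command fly auth docker configures Docker’s credentials for registry.fly.io; the helper names and the tag are placeholders.

```shell
#!/bin/sh
# Sketch: push a locally built image into Fly.io's registry for an existing
# app. Helper names, app name and tag are placeholders.

# fly_registry_ref <app> <tag>  ->  registry.fly.io/<app>:<tag>
fly_registry_ref() {
    printf 'registry.fly.io/%s:%s\n' "$1" "$2"
}

# push_to_fly_registry <local-image> <app> <tag>
push_to_fly_registry() {
    ref="$(fly_registry_ref "$2" "$3")" || return 1
    fly auth docker || return 1        # lets docker push to registry.fly.io
    docker tag "$1" "$ref" || return 1
    docker push "$ref" || return 1
    echo "$ref"                        # use this as "image" in the Machine config
}
```

The printed reference, such as registry.fly.io/some-app:dev, is what would go in the Machine config’s image field.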

The next interesting thing is the environment variable VESSEL_PUBLIC_KEY. I configured the Docker container’s ENTRYPOINT script to add the public key to the relevant authorized_keys file, allowing SSH access.

Finally, the services block is telling Fly to expose port 22 publicly, and route it to internal port 2222, where the SSH daemon is listening for connections.
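When scripting this, it’s handy to generate the JSON rather than hand-edit it. Here is a minimal sketch that assumes the same fields as the example above; machine_config is a hypothetical helper. Values are pasted in verbatim, so the function refuses input containing double quotes or backslashes. Region codes, image names and OpenSSH public keys don’t contain them.

```shell
#!/bin/sh
# Builds the Machine-creation JSON from the example above.
# machine_config is a hypothetical helper; field layout mirrors that example.

# machine_config <name> <region> <image> <public-key>
machine_config() {
    case "$1$2$3$4" in
        *'"'*|*'\'*) echo "machine_config: quotes/backslashes not supported" >&2
                     return 1 ;;
    esac
    cat <<EOF
{
  "name": "$1",
  "region": "$2",
  "config": {
    "image": "$3",
    "env": { "VESSEL_PUBLIC_KEY": "$4" },
    "services": [
      {
        "internal_port": 2222,
        "protocol": "tcp",
        "ports": [ { "port": 22 } ]
      }
    ]
  }
}
EOF
}
```

For example, you could pass the output of machine_config vessel-php dfw vesselphp/php:8.1 "$(cat ~/.ssh/id_ed25519.pub)" as the -d body of the create-machine request.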

Reaching the Machine

There’s one more wrinkle here. The Machine is created within an App, but the App has no IP address assigned to it. So, while we’re telling Fly to expose port 22 for connections, we don’t have a way to reach the Machine.

Adding an IP address to an app can take one of 2 forms:

```shell
# A. Use the `fly` command
fly ips allocate-v6 -a some-app

# B. Make a call to the semi-hidden GraphQL API
# See https://api.fly.io/graphql
curl -X POST \
  https://api.fly.io/graphql \
  -H "Authorization: Bearer ${FLY_API_TOKEN}" \
  -H "Content-Type: application/json" \
  -d '{
    "query": "mutation($input: AllocateIPAddressInput!) { allocateIpAddress(input: $input) { ipAddress { id address type region createdAt } } }",
    "variables": { "input": { "appId": "some-app", "type": "v6" } }
  }'
```

My ISP supports using IPv6, but not everyone is so lucky. You can assign IPv4 addresses if needed.
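The GraphQL body stays the same apart from the app name and address type, so it can be parameterized. In this sketch, allocate_ip_payload is a hypothetical helper. The mutation text is copied from the call above with only the type swapped, and v4 and v6 are the only two types this sketch accepts.

```shell
#!/bin/sh
# Builds the allocateIpAddress GraphQL body for a given app and address type.
# allocate_ip_payload is hypothetical; the mutation text is from the call above.

# allocate_ip_payload <app> <v4|v6>
allocate_ip_payload() {
    case "$2" in
        v4|v6) ;;
        *) echo "allocate_ip_payload: type must be v4 or v6" >&2; return 1 ;;
    esac
    # The single-quoted format string keeps $input literal for GraphQL.
    printf '{"query": "mutation($input: AllocateIPAddressInput!) { allocateIpAddress(input: $input) { ipAddress { id address type region createdAt } } }", "variables": { "input": { "appId": "%s", "type": "%s" } }}\n' "$1" "$2"
}
```

Passing -d "$(allocate_ip_payload some-app v4)" to that curl command requests an IPv4 address. The CLI equivalent is fly ips allocate-v4 -a some-app.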

A Region Near You

When I created the Machine, I defined which region to create it in. You can use the GraphQL API to find your closest region. What’s neat is that requests to Fly.io’s infrastructure go to the nearest “edge” location, and the region closest to you is calculated from that. So, we just need to make an API call:

```shell
curl -X POST \
  https://api.fly.io/graphql \
  -H "Content-Type: application/json" \
  -d '{"query": "query { nearestRegion { code name gatewayAvailable } }"}'
```
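To feed the answer into the Machine config’s region field, you need to pull the code out of the response. This sketch avoids a jq dependency by using sed. It assumes the usual GraphQL response shape, {"data": {"nearestRegion": {"code": ...}}}, and region_code_from_json is a hypothetical name. If jq is available, jq -r .data.nearestRegion.code is the sturdier choice.

```shell
#!/bin/sh
# Extracts nearestRegion.code from a GraphQL response read on stdin.
# Assumes the standard {"data": {"nearestRegion": {...}}} response shape.

region_code_from_json() {
    # Join lines so pretty-printed JSON works too, then capture the
    # "code" value inside the nearestRegion object.
    tr -d '\n' |
        sed -n 's/.*"nearestRegion"[[:space:]]*:[[:space:]]*{[^}]*"code"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

Piping the curl output above into region_code_from_json yields a bare region code such as dfw.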

After that, we should be able to connect to the machine over SSH!

There are a few tidbits to know about networking with a Machine:

- You can run multiple Machines within an App

- IP addresses are assigned at the App-level, NOT to a specific Machine

- The Fly Proxy will load balance requests across Machines running within an App. It takes the port into account, so if multiple Machines listen on port 22, you can’t be sure which one you’ll connect to. To ensure you reach a specific Machine, you’d need to connect via VPN and use the hostname <machine-id>.vm.<app-name>.internal.
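Over the VPN, reaching a specific Machine might look like the sketch below. The hostname format comes from the list above. The names machine_host and ssh_to_machine, and the default root user, are hypothetical. The sketch dials port 2222 directly, on the assumption that the 22-to-2222 mapping is a proxy service setting that private-network traffic doesn’t pass through.

```shell
#!/bin/sh
# Reach one specific Machine over the private (WireGuard) network.
# Helper names and the default user are hypothetical.

# machine_host <machine-id> <app-name>
machine_host() {
    printf '%s.vm.%s.internal\n' "$1" "$2"
}

# ssh_to_machine <machine-id> <app-name> [user]
# Connects straight to sshd's port (2222); port 22 on the private interface
# belongs to Fly's own `fly ssh console` machinery.
ssh_to_machine() {
    ssh -p 2222 "${3:-root}@$(machine_host "$1" "$2")"
}
```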

Syncing and Forwarding

The last-ish piece of the puzzle is integrating Mutagen into the workflow.

My first stab at this was to pull Mutagen into my own Golang project and programmatically manage it.

The structure of Mutagen’s code base made it awkward to manage its background agent, so I copied what other open-source projects do and shell out to Mutagen instead.

There are 2 commands used to start a development session - one to start file syncing, and one to do network forwarding.

They look a bit like this:

```shell
# Sync current directory "." to /var/www/html in the server
# An entry in ~/.ssh/config named "ssh-alias" helps us with SSH details
mutagen sync create \
  --ignore-vcs \
  -i node_modules \
  --name "my-session" \
  --sync-mode two-way-resolved \
  . ssh-alias:/var/www/html

# Forward localhost:8000 to localhost:80 in the Machine
# (the remote endpoint is <ssh-host>:<network-endpoint>, hence the "tcp:")
mutagen forward create \
  --name "my-session" \
  tcp:127.0.0.1:8000 ssh-alias:tcp:127.0.0.1:80
```

Combined, you get file syncing, and the ability to open your browser to localhost:8000 and see your app being served (assuming an HTTP server is listening on port 80 in the Machine).
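The ssh-alias entry that those commands depend on isn’t shown, so here is one way to produce it. This sketch makes three assumptions: the app answers at its APP.fly.dev hostname once it has an IP, port 22 is the public service that forwards to sshd, and the user and key are whatever your image authorizes. ssh_config_entry is a hypothetical helper.

```shell
#!/bin/sh
# Prints a ~/.ssh/config entry for the alias Mutagen connects through.
# Hostname pattern, user and key path are assumptions; helper is hypothetical.
# StrictHostKeyChecking accept-new trusts a host key the first time but still
# rejects a changed one.

# ssh_config_entry <alias> <app> <user> <identity-file>
ssh_config_entry() {
    cat <<EOF
Host $1
    HostName $2.fly.dev
    Port 22
    User $3
    IdentityFile $4
    IdentitiesOnly yes
    StrictHostKeyChecking accept-new
EOF
}
```

Append it with ssh_config_entry ssh-alias some-app root ~/.ssh/id_ed25519 >> ~/.ssh/config. accept-new needs OpenSSH 7.6 or later. A recreated Machine gets a new host key, so you may need to clear the old one with ssh-keygen -R some-app.fly.dev.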

Syncing is fast, too! This is due to both decent-quality internet and the proximity to a region. From San Antonio (where I am) to Dallas (the nearest Fly.io region), I was getting application responses back in just ~30-40ms.

Vessel

I Made a Thing™ to wrap up all this functionality so it’s just a few commands to create and run a remote development environment.

It grabs your Fly API token, creates an App/Machine for you, and then starts syncing/forwarding as needed.
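Stripped down, the session part of that workflow can be sketched in shell around the same two Mutagen commands shown earlier. This is not Vessel’s Go code, and the function names are hypothetical. The commands mutagen sync terminate and mutagen forward terminate are what tear the sessions down on Ctrl+C.

```shell
#!/bin/sh
# Rough shell version of a dev session: create Mutagen sync + forward
# sessions, and terminate both on Ctrl+C. Names/paths/ports are placeholders.

# start_session <name> <ssh-alias> <remote-dir> <local-port> <remote-port>
start_session() {
    mutagen sync create --ignore-vcs -i node_modules \
        --name "$1" --sync-mode two-way-resolved \
        . "$2:$3" || return 1
    mutagen forward create --name "$1" \
        "tcp:127.0.0.1:$4" "$2:tcp:127.0.0.1:$5" || {
        mutagen sync terminate "$1"   # don't leave a half-started session behind
        return 1
    }
}

# stop_session <name>
stop_session() {
    mutagen forward terminate "$1"
    mutagen sync terminate "$1"
}

# run_session: like start_session, but blocks until Ctrl+C, then cleans up.
run_session() {
    start_session "$@" || return 1
    session_name="$1"
    trap 'stop_session "$session_name"; exit 0' INT TERM
    echo "session $session_name running; press Ctrl+C to stop"
    while :; do sleep 1; done
}
```

The command run_session my-session ssh-alias /var/www/html 8000 80 starts both sessions from the current directory and stops them when you press Ctrl+C. If the forward fails to start, the sync session is terminated too, so a retry doesn’t collide with a leftover session name.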

I did a quick lightning talk at Laracon Online showing this off if you want to check it out.

It looks a bit like this:

```shell
# Install Vessel
curl https://vessel.fly.dev/install.sh | sh
# Add it to your $PATH as instructed, or use ~/.vessel/bin/vessel

# Grab or provide your Fly API token
vessel auth

# Head to your project and init
cd ~/code/my-project
vessel init
# Run through the init steps...it will create a dev environment

# Start a development session. This is a long-lived session.
# ctrl+c will clean up and stop the dev session
vessel start
```

The project has a few repos:

- vessel-cli is the main thing

- vessel-run is the base Docker image with SSH and the Rust program

- vessel-run-php holds the Laravel-ready Docker images for PHP 7.4/8.0/8.1

- vessel-installer is a little web app that provides the installer shell script

You can use the vessel-run base image (vesselapp/base:latest) and add whatever you want to your own Docker images. If you push an image of your own up to Docker Hub, you can use it in your own Vessel projects by defining it as part of the vessel init command (it has prompts).

I’ve been really enjoying it. There are still some sharp edges, but give it a spin!